I guess, it is no secret that testing plays a critical part in development of any software product. The more rigorous testing is, the better the final product will be. In this blog post we will be Testing Database Performance with tSQLt and SQLQueryStress.

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt1 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt1]()

There is a common practice whenthe software code testing is carried out quite meticulously, while the database testing is either skipped because of the lack of time, or carried out on leftovers. To be quite honest, things are even worse in real life: database is recalled only when there are actual problems with it already. Eventually, the work with database may become a real bottleneck in performance of your application.

To get rid of such problems, I suggest looking into various aspects of database testing, including load testing and performance testing of SQL Server in general by means of unit tests.

Let’s consider an abstract task. For instance, let’s imagine we are developing an engine for online shops. Our customers may have different volume of sales, product types… but to make it more straightforward, we will make the database structure maximally simple.

Database Structure

USE [master]

GO

IF DB_ID('db_sales') IS NOT NULL BEGIN

ALTER DATABASE [db_sales] SET SINGLE_USER WITH ROLLBACK IMMEDIATE

DROP DATABASE [db_sales]

END

GO

CREATE DATABASE [db_sales]

GO

USE [db_sales]

GO

CREATE TABLE dbo.Customers (

[CustomerID] INT IDENTITY PRIMARY KEY

, [FullName] NVARCHAR(150)

, [Email] VARCHAR(50) NOT NULL

, [Phone] VARCHAR(50)

)

GO

CREATE TABLE dbo.Products (

[ProductID] INT IDENTITY PRIMARY KEY

, [Name] NVARCHAR(150) NOT NULL

, [Price] MONEY NOT NULL CHECK (Price > 0)

, [Image] VARBINARY(MAX) NULL

, [Description] NVARCHAR(MAX)

)

GO

CREATE TABLE dbo.Orders (

[OrderID] INT IDENTITY PRIMARY KEY

, [CustomerID] INT NOT NULL

, [OrderDate] DATETIME NOT NULL DEFAULT GETDATE()

, [CustomerNotes] NVARCHAR(MAX)

, [IsProcessed] BIT NOT NULL DEFAULT 0

)

GO

ALTER TABLE dbo.Orders WITH NOCHECK

ADD CONSTRAINT FK_Orders_CustomerID FOREIGN KEY (CustomerID)

REFERENCES dbo.Customers (CustomerID)

GO

ALTER TABLE dbo.Orders CHECK CONSTRAINT FK_Orders_CustomerID

GO

CREATE TABLE dbo.OrderDetails

(

[OrderID] INT NOT NULL

, [ProductID] INT NOT NULL

, [Quantity] INT NOT NULL CHECK (Quantity > 0)

, PRIMARY KEY (OrderID, ProductID)

)

GO

ALTER TABLE dbo.OrderDetails WITH NOCHECK

ADD CONSTRAINT FK_OrderDetails_OrderID FOREIGN KEY (OrderID)

REFERENCES dbo.Orders (OrderID)

GO

ALTER TABLE dbo.OrderDetails CHECK CONSTRAINT FK_OrderDetails_OrderID

GO

ALTER TABLE dbo.OrderDetails WITH NOCHECK

ADD CONSTRAINT FK_OrderDetails_ProductID FOREIGN KEY (ProductID)

REFERENCES dbo.Products (ProductID)

GO

ALTER TABLE dbo.OrderDetails CHECK CONSTRAINT FK_OrderDetails_ProductID

GO

Let’s also suggest that our sophisticated client will work with the database with help of the preliminary written stored procedures. They all are pretty simple. The first procedure is for insertion of a new user or for getting ID of the existing one:

CREATE PROCEDURE dbo.GetCustomerID

(

@FullName NVARCHAR(150)

, @Email VARCHAR(50)

, @Phone VARCHAR(50)

, @CustomerID INT OUT

)

AS BEGIN

SET NOCOUNT ON;

SELECT @CustomerID = CustomerID

FROM dbo.Customers

WHERE Email = @Email

IF @CustomerID IS NULL BEGIN

INSERT INTO dbo.Customers (FullName, Email, Phone)

VALUES (@FullName, @Email, @Phone)

SET @CustomerID = SCOPE_IDENTITY()

END

END

The second procedure is for placing a new order:

CREATE PROCEDURE dbo.CreateOrder

(

@CustomerID INT

, @CustomerNotes NVARCHAR(MAX)

, @Products XML

)

AS BEGIN

SET NOCOUNT ON;

DECLARE @OrderID INT

INSERT INTO dbo.Orders (CustomerID, CustomerNotes)

VALUES (@CustomerID, @CustomerNotes)

SET @OrderID = SCOPE_IDENTITY()

INSERT INTO dbo.OrderDetails (OrderID, ProductID, Quantity)

SELECT @OrderID

, t.c.value('@ProductID', 'INT')

, t.c.value('@Quantity', 'INT')

FROM @Products.nodes('items/item') t(c)

END

Suppose, we need to ensure the minimal call during execution of queries. There will be hardly any performance issues with an empty database. Therefore, we need at least some data to test performance of our database. For this, let’s use the following script to generate test data for the Customers table:

DECLARE @obj INT = OBJECT_ID('dbo.Customers')

, @sql NVARCHAR(MAX)

, @cnt INT = 10

;WITH

E1(N) AS (

SELECT * FROM (

VALUES

(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1)

) t(N)

),

E2(N) AS (SELECT 1 FROM E1 a, E1 b),

E4(N) AS (SELECT 1 FROM E2 a, E2 b),

E8(N) AS (SELECT 1 FROM E4 a, E4 b)

SELECT @sql = '

DELETE FROM ' + QUOTENAME(OBJECT_SCHEMA_NAME(@obj))

+ '.' + QUOTENAME(OBJECT_NAME(@obj)) + '

;WITH

E1(N) AS (

SELECT * FROM (

VALUES

(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1)

) t(N)

),

E2(N) AS (SELECT 1 FROM E1 a, E1 b),

E4(N) AS (SELECT 1 FROM E2 a, E2 b),

E8(N) AS (SELECT 1 FROM E4 a, E4 b)

INSERT INTO ' + QUOTENAME(OBJECT_SCHEMA_NAME(@obj))

+ '.' + QUOTENAME(OBJECT_NAME(@obj)) + '(' +

STUFF((

SELECT ', ' + QUOTENAME(name)

FROM sys.columns c

WHERE c.[object_id] = @obj

AND c.is_identity = 0

AND c.is_computed = 0

FOR XML PATH(''), TYPE).value('.', 'NVARCHAR(MAX)'), 1, 2, '')

+ ')

SELECT TOP(' + CAST(@cnt AS VARCHAR(10)) + ') ' +

STUFF((

SELECT '

, ' + QUOTENAME(name) + ' = ' +

CASE

WHEN TYPE_NAME(c.system_type_id) IN (

'varchar', 'char', 'nvarchar',

'nchar', 'ntext', 'text'

)

THEN (

STUFF((

SELECT TOP(

CASE WHEN max_length = -1

THEN CAST(RAND() * 10000 AS INT)

ELSE max_length

END

/

CASE WHEN TYPE_NAME(c.system_type_id) IN ('nvarchar', 'nchar', 'ntext')

THEN 2

ELSE 1

END

) '+SUBSTRING(x, (ABS(CHECKSUM(NEWID())) % 80) + 1, 1)'

FROM E8

FOR XML PATH(''), TYPE).value('.', 'NVARCHAR(MAX)'), 1, 1, '')

)

WHEN TYPE_NAME(c.system_type_id) = 'tinyint'

THEN '50 + CRYPT_GEN_RANDOM(10) % 50'

WHEN TYPE_NAME(c.system_type_id) IN ('int', 'bigint', 'smallint')

THEN 'CRYPT_GEN_RANDOM(10) % 25000'

WHEN TYPE_NAME(c.system_type_id) = 'uniqueidentifier'

THEN 'NEWID()'

WHEN TYPE_NAME(c.system_type_id) IN ('decimal', 'float', 'money', 'smallmoney')

THEN 'ABS(CAST(NEWID() AS BINARY(6)) % 1000) * RAND()'

WHEN TYPE_NAME(c.system_type_id) IN ('datetime', 'smalldatetime', 'datetime2')

THEN 'DATEADD(MINUTE, RAND(CHECKSUM(NEWID()))

*

(1 + DATEDIFF(MINUTE, ''20000101'', GETDATE())), ''20000101'')'

WHEN TYPE_NAME(c.system_type_id) = 'bit'

THEN 'ABS(CHECKSUM(NEWID())) % 2'

WHEN TYPE_NAME(c.system_type_id) IN ('varbinary', 'image', 'binary')

THEN 'CRYPT_GEN_RANDOM(5)'

ELSE 'NULL'

END

FROM sys.columns c

WHERE c.[object_id] = @obj

AND c.is_identity = 0

AND c.is_computed = 0

FOR XML PATH(''), TYPE).value('.', 'NVARCHAR(MAX)'), 1, 8, '

')

+ '

FROM E8

CROSS APPLY (

SELECT x = ''0123456789-ABCDEFGHIJKLMNOPQRSTUVWXYZ abcdefghijklmnopqrstuvwxyz''

) t'

EXEC sys.sp_executesql @sql

The script was created for the purpose of generation of random test data for unstructured tables. As a result of this approach, we succeed in terms of versatility, but failed in terms of reality:

CustomerID FullName Email Phone

———– ———————————— —————- —————

1 uN9UiFZ9i0pALwQXIfC628Ecw35VX9L i6D0FNBuKo9I ZStNRH8t1As2S

2 Jdi6M0BqxhE-7NEvC1 a12 UTjK28OSpTHx 7DW2HEv0WtGN

3 0UjI9pIHoyeeCEGHHT6qa2 2hUpYxc vN mqLlO 7c R5 U3ha

4 RMH-8DKAmewi2WdrvvHLh w-FIa wrb uH

5 h76Zs-cAtdIpw0eewYoWcY2toIo g5pDTiTP1Tx qBzJw8Wqn

6 jGLexkEY28Qd-OmBoP8gn5OTc FESwE l CkgomDyhKXG

7 09X6HTDYzl6ydcdrYonCAn6qyumq9 EpCkxI01tMHcp eOh7IFh

8 LGdGeF5YuTcn2XkqXT-92 cxzqJ4Y cFZ8yfEkr

9 7 Ri5J30ZtyWBOiUaxf7MbEKqWSWEvym7 0C-A7 R74Yc KDRJXX hw

10 D DzeE1AxUHAX1Bv3eglY QsZdCzPN0 RU-0zVGmU

Of course, nobody stops us from wiring a script for generating more realistic data for the same Customers table:

DECLARE @cnt INT = 10

DELETE FROM dbo.Customers

;WITH

E1(N) AS (

SELECT * FROM (

VALUES

(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1)

) t(N)

),

E2(N) AS (SELECT 1 FROM E1 a, E1 b),

E4(N) AS (SELECT 1 FROM E2 a, E2 b),

E8(N) AS (SELECT 1 FROM E4 a, E4 b)

INSERT INTO dbo.Customers (FullName, Email, Phone)

SELECT TOP(@cnt)

[FullName] = txt

, [Email] = LOWER(txt) + LEFT(ABS(CHECKSUM(NEWID())), 3) + '@gmail.com'

, [Phone] =

'+38 (' + LEFT(ABS(CHECKSUM(NEWID())), 3) + ') ' +

STUFF(STUFF(LEFT(ABS(CHECKSUM(NEWID())), 9)

, 4, 1, '-')

, 7, 1, '-')

FROM E8

CROSS APPLY (

SELECT TOP(CAST(RAND(N) * 10 AS INT)) txt

FROM (

VALUES

(N'Boris_the_Blade'),

(N'John'), (N'Steve'),

(N'Mike'), (N'Phil'),

(N'Sarah'), (N'Ann'),

(N'Andrey'), (N'Liz'),

(N'Stephanie')

) t(txt)

ORDER BY NEWID()

) t

Data has become more realistic:

FullName Email Phone

————— ————————– ——————-

Boris_the_Blade boris_the_blade1@gmail.com +38 (146) 296-33-10

John john130@mail.com +38 (882) 688-98-59

Phil phil155@gmail.com +38 (125) 451-73-71

Mike mike188@gmail.com +38 (111) 169-59-14

Sarah sarah144@gmail.com +38 (723) 124-50-60

Andrey andrey100@gmail.com +38 (193) 160-91-48

Stephanie stephanie188@gmail.com +38 (590) 128-86-02

John john723@gmail.com +38 (194) 101-06-65

Phil phil695@gmail.com +38 (164) 180-57-37

Mike mike200@gmail.com +38 (110) 131-89-45

However, we should not forget that we have foreign keys between tables, and generation of consistent data for the rest of instances is a way harder task. To avoid inventing a solution for this problem, I suggest using dbForge Data Generator for SQL Server that allows to generate meaningful test data for database tables.

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt2 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt2]()

SELECT TOP 10 *

FROM dbo.Customers

ORDER BY NEWID()

CustomerID FullName Email Phone

———– ————– ———————————– —————–

18319 Noe Pridgen Doyle@example.com (682) 219-7793

8797 Ligia Gaddy CrandallR9@nowhere.com (623) 144-6165

14712 Marry Almond Cloutier39@nowhere.com (601) 807-2247

8280 NULL Lawrence_Z_Mortensen85@nowhere.com (710) 442-3219

8012 Noah Tyler RickieHoman867@example.com (944) 032-0834

15355 Fonda Heard AlfonsoGarcia@example.com (416) 311-5605

10715 Colby Boyd Iola_Daily@example.com (718) 164-1227

14937 Carmen Benson Dennison471@nowhere.com (870) 106-6468

13059 Tracy Cornett DaniloBills@example.com (771) 946-5249

7092 Jon Conaway Joey.Redman844@example.com (623) 140-7543

Test data is ready. Now, let’s proceed to testing productivity of our stored procedures.

We have the GetCustomerID procedure that returns a client ID. If the ID does not exist, the procedure creates the corresponding record in the Customers table. Let’s try to execute it with the preliminary enabled view of the actual execution plan:

DECLARE @CustomerID INT

EXEC dbo.GetCustomerID @FullName = N'Сергей'

, @Email = 'sergeys@mail.ru'

, @Phone = '7105445'

, @CustomerID = @CustomerID OUT

SELECT @CustomerID

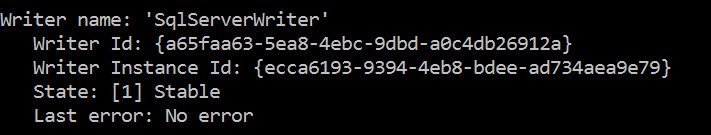

The plan shows that the full scan of the clustered index takes place:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt3 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt3]()

To achieve this, SQL Server has to make 200 logical reads from the table and it takes approximately 20 milliseconds:

Table ‘Customers’. Scan count 1, logical reads 200, physical reads 0, …

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 20 ms.

And here we are talking about execution of one single query. What if our stored procedure will be executed very actively? The constant index scanning will reduce the server productivity.

Let’s try to execute the stress testing of our stored procedure with one interesting open-source tool, SQLQueryStress (link to GitHub).

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt4 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt4]()

We can see that the 2 thousand calls of the GetCustomerID procedure in two streams took a little less than 4 seconds. Now, let’s see what will happen if we add an index to the field that is involved in our search:

CREATE NONCLUSTERED INDEX IX_Email ON dbo.Customers (Email)

The execution plan shows that Index Scan has been replaced with Index Seek:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt5 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt5]()

The logical reads and total execution time have been reduced:

Table ‘Customers’. Scan count 1, logical reads 2, …

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 8 ms.

If we repeat our stress test in SQLQueryStress, we will see that our procedure loads server less and is executed faster during the multiple call:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt6 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt6]()

Now, let’s try to emulate the mass order placement with SQLQueryStress:

DECLARE @CustomerID INT

, @CustomerNotes NVARCHAR(MAX)

, @Products XML

SELECT TOP(1) @CustomerID = CustomerID

, @CustomerNotes = REPLICATE('a', RAND() * 100)

FROM dbo.Customers

ORDER BY NEWID()

SELECT @Products = (

SELECT [@ProductID] = ProductID

, [@Quantity] = CAST(RAND() * 10 AS INT)

FROM dbo.Products

ORDER BY ProductID

OFFSET CAST(RAND() * 1000 AS INT) ROWS

FETCH NEXT CAST(RAND() * 10 AS INT) + 1 ROWS ONLY

FOR XML PATH('item'), ROOT('items')

)

EXEC dbo.CreateOrder @CustomerID = @CustomerID

, @CustomerNotes = @CustomerNotes

, @Products = @Products

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt7 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt7]()

100 executions of the procedure in two streams simultaneously took 2.5 seconds. Let’s clear the waits statistics:

DBCC SQLPERF("sys.dm_os_wait_stats", CLEAR)

We can see that the first place is occupied by WRITELOG, the wait time of which approximately corresponds to the total execution time of our stress test. What does this wait mean? Since each insert command is atomic, the physical fixation of changes in log takes place after its execution. When there are lots of short transactions, the queue appears, because log operations take place simultaneously, unlike data files.

In SQL Server 2014, the ability to setup writeback to the Delayed Durability log was introduced, which can be enabled on the database level:

ALTER DATABASE db_sales SET DELAYED_DURABILITY = ALLOWED

Next, we need to just alter the stored procedure:

ALTER PROCEDURE dbo.CreateOrder

(

@CustomerID INT

, @CustomerNotes NVARCHAR(MAX)

, @Products XML

)

AS BEGIN

SET NOCOUNT ON;

BEGIN TRANSACTION t

DECLARE @OrderID INT

INSERT INTO dbo.Orders (CustomerID, CustomerNotes)

VALUES (@CustomerID, @CustomerNotes)

SET @OrderID = SCOPE_IDENTITY()

INSERT INTO dbo.OrderDetails (OrderID, ProductID, Quantity)

SELECT @OrderID

, t.c.value('@ProductID', 'INT')

, t.c.value('@Quantity', 'INT')

FROM @Products.nodes('items/item') t(c)

COMMIT TRANSACTION t WITH (DELAYED_DURABILITY = ON)

END

Let’s clear statistics and run stress test for the second time:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt8 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt8]()

The total execution time has been reduced in two times, and the WRITELOG waits have become minimal:

wait_type wait_time

————————– ———-

PREEMPTIVE_OS_WRITEFILE 0.027000

PAGEIOLATCH_EX 0.024000

PAGELATCH_EX 0.020000

WRITELOG 0.014000

Let’s take a look at another situation, when periodic check of the execution productivity of one or another query is required. SQLQueryStress is not that convenient tool for this goal, since we have to open the app, copy the query and wait for its execution.

EXEC sys.sp_configure 'clr enabled', 1

RECONFIGURE

GO

ALTER DATABASE [db_sales] SET TRUSTWORTHY ON

GO

Can we automate this somehow?

In 2014, I tried out tSQLt for the first time, which turned out to be quite a nice free framework for unit testing. Let’s try to install tSQLt and create an autotest for checking the productivity of our stored procedure.

Let’s download the latest version of tSQLt and set up an SQL Server instance for the work with CLR:

After this, let’s execute the tSQLt.class.sql script from the archive against our database. The script creates its own tSQLt schema, CLR assembly and lots of script objects. A part of the procedures which are supposed for internal framework usage will contain the Private_ prefix.

If everything has been installed successfully, we’ll see the following message in the Output:

+—————————————–+

| |

| Thank you for using tSQLt. |

| |

| tSQLt Version: 1.0.5873.27393 |

| |

+—————————————–+

Now, let’s create a schema in which we will create autotests:

USE [db_sales]

GO

CREATE SCHEMA [Performance]

GO

EXEC sys.sp_addextendedproperty @name = N'tSQLt.Performance'

, @value = 1

, @level0type = N'SCHEMA'

, @level0name = N'Performance'

GO

Note, that Extended Property defines the relation of one or another object to the tSQLt functionality.

Let’s create a test in the Performance schema and specify the test prefix in its name:

CREATE PROCEDURE [Performance].[test ProcTimeExecution]

AS BEGIN

SET NOCOUNT ON;

EXEC tSQLt.Fail 'TODO: Implement this test.'

END

Let’s try to execute the created autotest. For this, we can either execute

EXEC tSQLt.RunAll

…or specify schema explicitly:

EXEC tSQLt.Run 'Performance'

, or a certain test:

EXEC tSQLt.Run 'Performance.test ProcTimeExecution'

If it is required to tun the lately executed test, we can call Run without parameters:

EXEC tSQLt.Run

After execution of one of the above commands, we will get the following information:

[Performance].[test ProcTimeExecution] failed: (Failure) TODO: Implement this test.

+———————-+

|Test Execution Summary|

+———————-+

|No|Test Case Name |Dur(ms)|Result |

+–+————————————–+——-+——-+

|1 |[Performance].[test ProcTimeExecution]| 0|Failure|

Let’s try to modify the contents of the autotest to something useful. For instance, let’s take the GetUnprocessedOrders procedure that returns the list of unprocessed orders:

CREATE PROCEDURE dbo.GetUnprocessedOrders

AS BEGIN

SET NOCOUNT ON;

SELECT

o.OrderID

, o.OrderDate

, c.FullName

, c.Email

, c.Phone

, OrderSum = (

SELECT SUM(p.Price + d.Quantity)

FROM dbo.OrderDetails d

JOIN dbo.Products p ON d.ProductID = p.ProductID

WHERE d.OrderID = o.OrderID

)

FROM dbo.Orders o

JOIN dbo.Customers c ON o.CustomerID = c.CustomerID

WHERE o.IsProcessed = 0

END

…and create an autotest that will execute the procedure for a certain number of times and will end with error, if the average execution time is more than the specified threshold value.

ALTER PROCEDURE [Performance].[test ProcTimeExecution]

AS BEGIN

SET NOCOUNT ON;

DECLARE @time DATETIME

, @duration BIGINT = 0

, @cnt TINYINT = 10

WHILE @cnt > 0 BEGIN

SET @time = GETDATE()

EXEC dbo.GetUnprocessedOrders

SET @duration += DATEDIFF(MILLISECOND, @time, GETDATE())

SET @cnt -= 1

END

IF @duration / 10 > 100 BEGIN

DECLARE @txt NVARCHAR(MAX) = 'High average execution time: '

+ CAST(@duration / 10 AS NVARCHAR(10)) + ' ms'

EXEC tSQLt.Fail @txt

END

END

Let’s execute the autotest:

EXEC tSQLt.Run 'Performance'

We receive the following message:

[Performance].[test ProcTimeExecution] failed: (Error) High execution time: 161 ms

+———————-+

|Test Execution Summary|

+———————-+

|No|Test Case Name |D ur(ms)|Result|

+–+————————————–+——-+——+

|1 |[Performance].[test ProcTimeExecution]| 1620|Error |

Let’s try to optimize the query to make the test pass. First, let’s take a look at the execution plan:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt9 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt9]()

As we see, the problem is in the frequent call to the clustered index of the Products table. A large number or logical reads also confirms this statement:

Table ‘Customers’. Scan count 1, logical reads 200, …

Table ‘Orders’. Scan count 1, logical reads 3886, …

Table ‘Products’. Scan count 0, logical reads 73607, …

Table ‘OrderDetails’. Scan count 1, logical reads 235, …

How can we fix the situation? Well, we can either add a non-clusted index, include the Price field to it, make a pre-estimate of values in a separate table. Alternatively, we can create an aggregate index view:

CREATE VIEW dbo.vwOrderSum

WITH SCHEMABINDING

AS

SELECT d.OrderID

, OrderSum = SUM(p.Price + d.Quantity)

, OrderCount = COUNT_BIG(*)

FROM dbo.OrderDetails d

JOIN dbo.Products p ON d.ProductID = p.ProductID

GROUP BY d.OrderID

GO

CREATE UNIQUE CLUSTERED INDEX IX_OrderSum

ON dbo.vwOrderSum (OrderID)

...and modify the procedure:

ALTER PROCEDURE dbo.GetUnprocessedOrders

AS BEGIN

SET NOCOUNT ON;

SELECT

o.OrderID

, o.OrderDate

, c.FullName

, c.Email

, c.Phone

, s.OrderSum

FROM dbo.Orders o

JOIN dbo.Customers c ON o.CustomerID = c.CustomerID

JOIN dbo.vwOrderSum s WITH(NOEXPAND) ON o.OrderID = s.OrderID

WHERE o.IsProcessed = 0

END

It’s better to specify the NOEXPAND hint to make the optimizer execute the index from our view. Besides, we can create a new filtered index to minimize the quantity of logical reads from Orders:

CREATE NONCLUSTERED INDEX IX_UnProcessedOrders

ON dbo.Orders (OrderID, CustomerID, OrderDate)

WHERE IsProcessed = 0

Now, a more simple plan is used during execution of our stored procedure:

![SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt10 SQL SERVER - Testing Database Performance with tSQLt and SQLQueryStress tsqlt10]()

The number of logical reads has decreased as well:

Table ‘Customers’. Scan count 1, logical reads 200, …

Table ‘Orders’. Scan count 1, logical reads 21, …

Table ‘vwOrderSum’. Scan count 1, logical reads 44, …

Execution of the stored procedure has been also minimized, and our test will be executed successfully:

|No|Test Case Name |Dur(ms)|Result |

+–+————————————–+——-+——-+

|1 |[Performance].[test ProcTimeExecution]| 860|Success|

We can hug ourselves. We have optimized all bottlenecks and made a really cool product. But let’s be sincere with ourselves. Data tend to mount up, and SQL Server generates execution plan on the basis of the expected number of lines. We have performed testing with perspective for the future, but there’ s no guarantee that somebody won’t delete the required index and so on. That’s why, it is highly important to run similar autotests on a regular basis in order to influence problems timely.

Now, let’s see what else we can do with unit tests.

For instance, we can check all execution plans for the MissingIndexGroup section. And if the section exists, SQL Server considers that a certain query lacks index:

CREATE PROCEDURE [Performance].[test MissingIndexes]

AS BEGIN

SET NOCOUNT ON

DECLARE @msg NVARCHAR(MAX)

, @rn INT

SELECT t.text

, p.query_plan

, q.total_worker_time / 100000.

FROM (

SELECT TOP 100 *

FROM sys.dm_exec_query_stats

ORDER BY total_worker_time DESC

) q

CROSS APPLY sys.dm_exec_sql_text(q.sql_handle) t

CROSS APPLY sys.dm_exec_query_plan(q.plan_handle) p

WHERE p.query_plan.exist('//*:MissingIndexGroup') = 1

SET @rn = @@ROWCOUNT

IF @rn > 0 BEGIN

SET @msg = 'Missing index in ' + CAST(@rn AS VARCHAR(10)) + ' queries'

EXEC tSQLt.Fail @msg

END

END

Also, we can automate search of the unused indexes. It’s quite simple – you just need to know statics of usage of one or another index in dm_db_index_usage_stats:

CREATE PROCEDURE [Performance].[test UnusedUndexes]

AS BEGIN

DECLARE @tables INT

, @indexes INT

, @msg NVARCHAR(MAX)

SELECT @indexes = COUNT(*)

, @tables = COUNT(DISTINCT o.[object_id])

FROM sys.objects o

CROSS APPLY (

SELECT s.index_id

, index_usage = s.user_scans + s.user_lookups + s.user_seeks

, usage_percent =

(s.user_scans + s.user_lookups + s.user_seeks) * 100.

/

NULLIF(SUM(s.user_scans + s.user_lookups + s.user_seeks) OVER (), 0)

, index_count = COUNT(*) OVER ()

FROM sys.dm_db_index_usage_stats s

WHERE s.database_id = DB_ID()

AND s.[object_id] = o.[object_id]

) t

WHERE o.is_ms_shipped = 0

AND o.[schema_id] != SCHEMA_ID('tSQLt')

AND o.[type] = 'U'

AND (

(t.usage_percent < 5 AND t.index_usage > 100 AND t.index_count > 1)

OR

t.index_usage = 0

)

IF @tables > 0 BEGIN

SET @msg = 'Database contains ' + CAST(@indexes AS VARCHAR(10))

+ ' unused indexes in ' + CAST(@tables AS VARCHAR(10)) + ' tables'

EXEC tSQLt.Fail @msg

END

END

When developing large and complicated systems, it is a frequent case when a table can be created, filled with data and then forgotten for good.

So, how can such table be determined? For example, there are no references to such tables, and selection from these tables has not taken place since the start of the server, given that the server is working for more than a week. The conditions are relative, and should be adopted for each specific case.

CREATE PROCEDURE [Performance].[test UnusedTables]

AS BEGIN

SET NOCOUNT ON

DECLARE @msg NVARCHAR(MAX)

, @rn INT

, @txt NVARCHAR(1000) = N'Starting up database ''' + DB_NAME() + '''.'

DECLARE @database_start TABLE (

log_date SMALLDATETIME,

spid VARCHAR(50),

msg NVARCHAR(4000)

)

INSERT INTO @database_start

EXEC sys.xp_readerrorlog 0, 1, @txt

SELECT o.[object_id]

, [object_name] = SCHEMA_NAME(o.[schema_id]) + '.' + o.name

FROM sys.objects o

WHERE o.[type] = 'U'

AND o.is_ms_shipped = 0

AND o.[schema_id] != SCHEMA_ID('tSQLt')

AND NOT EXISTS(

SELECT *

FROM sys.dm_db_index_usage_stats s

WHERE s.database_id = DB_ID()

AND s.[object_id] = o.[object_id]

AND (

s.user_seeks > 0

OR s.user_scans > 0

OR s.user_lookups > 0

OR s.user_updates > 0

)

)

AND NOT EXISTS(

SELECT *

FROM sys.sql_expression_dependencies s

WHERE o.[object_id] IN (s.referencing_id, s.referenced_id)

)

AND EXISTS(

SELECT 1

FROM @database_start t

HAVING MAX(t.log_date) < DATEADD(DAY, -7, GETDATE()) ) SET @rn = @@ROWCOUNT IF @rn > 0 BEGIN

SET @msg = 'Database contains ' + CAST(@rn AS VARCHAR(10)) + ' unused tables'

EXEC tSQLt.Fail @msg

END

END

I can create yet more tests similar to the above one)

To sum up, I can recommend trying out tSQLt and SQLQueryStress without any scruples: these products are completely free and have proved to be really useful during heavy load testing of SQL Server and optimization of server productivity.

Speaking of the unit testing software, I would also like to mention dbForge Unit Test – a convenient add-in from Devart for automated unit testing in SQL Server Management Studio. The tool delivers high-quality unit testing and cuts down time spent on the process. Besides, Devart offers a free fully functional trial for the product for 30 days.

Reference: Pinal Dave (http://blog.sqlauthority.com)

First appeared on SQL SERVER – Testing Database Performance with tSQLt and SQLQueryStress

![SQL SERVER - Clustered Instance Online Error - SQL Server Network Interfaces: Error Locating Server/Instance Specified [xFFFFFFFF] sql-clus-01-800x241 SQL SERVER - Clustered Instance Online Error - SQL Server Network Interfaces: Error Locating Server/Instance Specified [xFFFFFFFF] sql-clus-01-800x241](http://blog.sqlauthority.com/wp-content/uploads/2016/11/sql-clus-01-800x241.jpg)

As you might have seen, along with performance tuning related work, I also help community in getting answers to questions and troubleshoot issue with them. A few days ago, one of community leader and user group champion contacted me for assistance. He informed me that they are using Microsoft Access Database Engine 2010 provider to read data from an excel file. But they are seeing below error while doing test connection. Let us learn about error related to OLE DB provider.

As you might have seen, along with performance tuning related work, I also help community in getting answers to questions and troubleshoot issue with them. A few days ago, one of community leader and user group champion contacted me for assistance. He informed me that they are using Microsoft Access Database Engine 2010 provider to read data from an excel file. But they are seeing below error while doing test connection. Let us learn about error related to OLE DB provider.

Working with new data types is something we need to evolve. In this blog, I have written on a number of articles on JSON which you can take a look at. I wanted to write about some of the interesting errors one will get when using JSON custom paths. This is something I stumbled accidentally when using JSON constructs. Let us see how to fix error 13603 while working with JSON documents.

Working with new data types is something we need to evolve. In this blog, I have written on a number of articles on JSON which you can take a look at. I wanted to write about some of the interesting errors one will get when using JSON custom paths. This is something I stumbled accidentally when using JSON constructs. Let us see how to fix error 13603 while working with JSON documents.![SQL SERVER - Installation Error - [Microsoft][ODBC Driver Manager] Data source name not found and no default driver specified setup-data-source-01 SQL SERVER - Installation Error - [Microsoft][ODBC Driver Manager] Data source name not found and no default driver specified setup-data-source-01](http://blog.sqlauthority.com/wp-content/uploads/2016/12/setup-data-source-01.jpg)

![SQL SERVER - Unable to Start SQL Service - Server TCP provider failed to listen on ['any' <ipv4> 1433]. Tcp port is already in use. port-use-01-800x213 SQL SERVER - Unable to Start SQL Service - Server TCP provider failed to listen on ['any' <ipv4> 1433]. Tcp port is already in use. port-use-01-800x213](http://blog.sqlauthority.com/wp-content/uploads/2017/01/port-use-01-800x213.jpg)